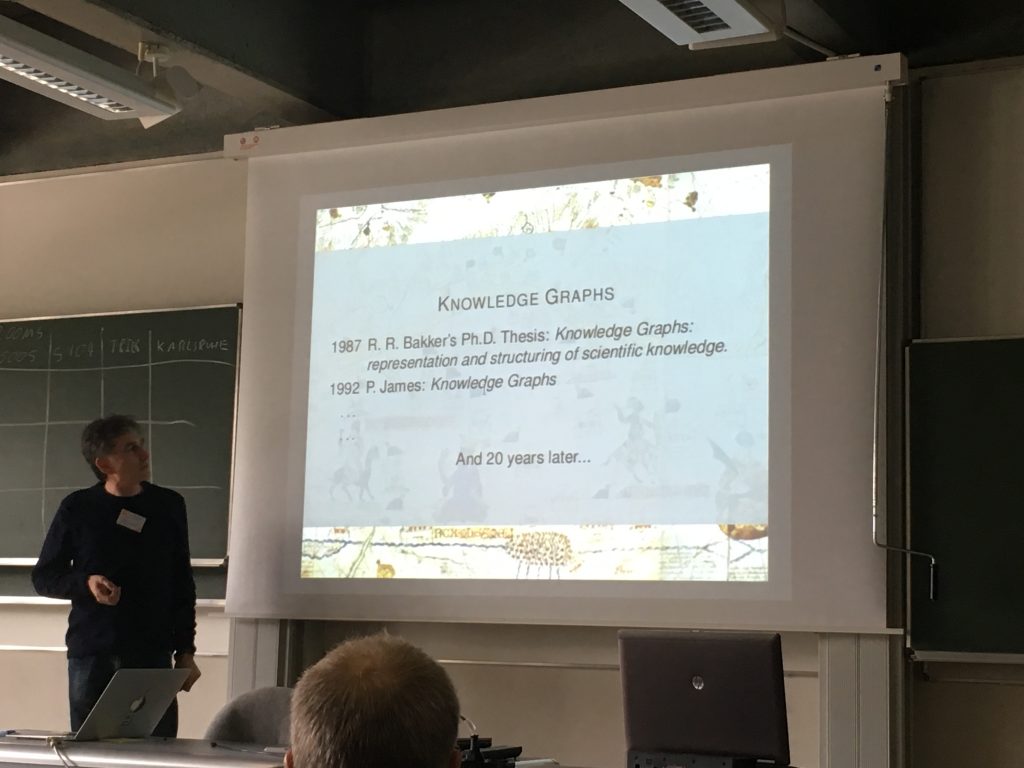

This week, March 4-6, 2019, was the W3C Graph Data Workshop – Creating Bridges: RDF, Property Graph and SQL, held in Berlin.

Kicking off the @w3c workshop on graph data. This should be an interesting event. pic.twitter.com/VnTTH8MaRf

— Juan Sequeda (@juansequeda) March 4, 2019

IF there is a consensus within the community that we need to standardize mappings between Property Graphs and RDF Graphs THEN this will have been a successful meeting.

– There is interest in standardizing a Property Graph data model with a schema.

Brad Bebee’s Keynote

“When a customer thinks about graph, they think about what they draw on the whiteboard. The customer is NOT thinking about graph frameworks (PG vs RDF)” – paraphrasing @b2ebs

AGREE! That has been our view with https://t.co/XXFqdJgxAR: it’s just a graph! #W3CGraphWorkshop

— Juan Sequeda (@juansequeda) March 4, 2019

“Use graphs to link information together to transform the business. Link things that were never connected before. This is really exciting.”

#W3CGraphWorkshop @b2ebs’s Keynote

– Neptune seems to be the favorite Amazon product launch of 2018. People love graphs

– “Graph lets me integrate data like crazy”

– View the market as customers who could benefit from graphs

– Developers coming from relational databases find PG natural. Information architects find RDF natural. pic.twitter.com/6tJDy0FKDz

— Juan Sequeda (@juansequeda) March 4, 2019

Coexistence or Competition

After discussions about how standardization works within W3C and ISO, there was a mini panel session on “Coexistence or Competition” with Olaf Hartig, Alastair Green and Peter Eisentraut. The takeaways:

#W3CGraphWorkshop @olafhartig’s points:

– Coexistence is unavoidable. This will lead to competition. Those who embrace coexistence will be the ones that succeed

– We need well-defined approaches, not ad-hoc implementations

– Don’t reinvent. Reuse & extend. Example: RDF* & SPARQL* pic.twitter.com/MMGFNWFoZq

— Juan Sequeda (@juansequeda) March 4, 2019

Peter Eisentraut/Postgres takeaways:

– Creating a query language is really hard. Be careful.

– NoSQL systems came along, but they never produced a query language, just APIs.

– Where are the updates?? They are usually half of the operations. #W3CGraphWorkshop

— Juan Sequeda (@juansequeda) March 4, 2019

#W3CGraphWorkshop Alastair Green @neo4j:

– It’s always hard to agree. It’s a social process!

– PG has to get organized. There is a lot going on: openCypher, PGQL, SQL/PGQ, G-CORE. RDF seems to be more organized.

– Cooperate to define reasonable interoperation standards. pic.twitter.com/uEpZg4Jyd4

— Juan Sequeda (@juansequeda) March 4, 2019

Lightning talks

The day ended with over 25 lightning talks. The moderators were excellent timekeepers. The two main themes that caught my attention were the following:

The second day consisted of three simultaneous tracks, Interoperation, Problems & Opportunities, and Standards Evolution, for a total of 12 sessions. By coincidence (?), all the sessions I was interested in were in the Interoperation track.

Graph Data Interchange

I attended the #W3CGraphWorkshop Graph Data Interchange session. The outcome was interest in the following:

– how JSON-LD can support PG

– a default mapping and a mapping language for RDF<->PG

– @olafhartig’s RDF*/SPARQL* should be submitted as a W3C member submission

— Juan Sequeda (@juansequeda) March 5, 2019

Graph Query Interoperation

#W3CGraphWorkshop Graph Query Interoperation session, my takeaways:

– @AndySeaborne: it is important to understand the output for interoperability

– @olafhartig: query interoperability requires interoperability of data models, and we don’t even have that yet.

1/3

— Juan Sequeda (@juansequeda) March 5, 2019

– There are existing systems that translate GraphQL to SPARQL, SPARQL to Gremlin, and Cypher to Gremlin; however, without well-defined mappings, how do we know they are “correct”?

– My position: we need to define the mappings!!! And we need to understand query preservation

2/3

— Juan Sequeda (@juansequeda) March 5, 2019

– There is interest in spending time on 1) defining a standard abstract PG data model and 2) defining a direct mapping and a customizable mapping between PG and RDF.

– There is some interest in defining mappings from SQL to PG.

– Could this work be done @w3c?

3/3

— Juan Sequeda (@juansequeda) March 5, 2019
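To make the “direct mapping” idea a bit more concrete, here is a minimal sketch in Python. It is not a standard and not something proposed at the workshop: the `Node`/`Edge` classes, the `direct_mapping` function and the `http://example.org/` base IRI are all hypothetical. It assumes one naive convention: each node becomes an IRI, each label an `rdf:type`, each property a literal-valued triple, each edge a triple, and each edge property is attached to that edge triple with an RDF*-style quoted triple (`<< s p o >>`), in the spirit of @olafhartig’s RDF*.

```python
import json
from dataclasses import dataclass, field
from urllib.parse import quote

XSD = "http://www.w3.org/2001/XMLSchema#"
RDF_TYPE = "<http://www.w3.org/1999/02/22-rdf-syntax-ns#type>"


@dataclass
class Node:
    """Hypothetical property graph node: an id, labels and key/value properties."""
    id: str
    labels: list = field(default_factory=list)
    props: dict = field(default_factory=dict)


@dataclass
class Edge:
    """Hypothetical property graph edge: source id, target id, label, properties."""
    src: str
    tgt: str
    label: str
    props: dict = field(default_factory=dict)


def literal(value) -> str:
    """Serialize a Python value as an N-Triples literal."""
    if isinstance(value, bool):  # check bool first: bool is a subclass of int
        return f'"{str(value).lower()}"^^<{XSD}boolean>'
    if isinstance(value, int):
        return f'"{value}"^^<{XSD}integer>'
    # json.dumps adds the quotes and escapes backslashes, quotes and control characters
    return json.dumps(str(value), ensure_ascii=False)


def direct_mapping(nodes, edges, base="http://example.org/"):
    """Naive, hypothetical PG -> RDF* mapping; returns N-Triples-star lines."""

    def iri(kind, name):
        # Percent-encode the name so ids such as "New York" still give valid IRIs
        return f"<{base}{kind}/{quote(name, safe='')}>"

    triples = []
    for n in nodes:
        s = iri("node", n.id)
        for lab in n.labels:
            triples.append(f"{s} {RDF_TYPE} {iri('label', lab)} .")
        for key, value in n.props.items():
            triples.append(f"{s} {iri('prop', key)} {literal(value)} .")
    for e in edges:
        edge = f"{iri('node', e.src)} {iri('rel', e.label)} {iri('node', e.tgt)}"
        triples.append(f"{edge} .")
        # Edge properties annotate the edge triple itself via a quoted triple
        for key, value in e.props.items():
            triples.append(f"<< {edge} >> {iri('prop', key)} {literal(value)} .")
    return triples


if __name__ == "__main__":
    nodes = [Node("alice", ["Person"], {"name": "Alice"}),
             Node("bob", ["Person"], {"name": "Bob"})]
    edges = [Edge("alice", "bob", "knows", {"since": 2010})]
    for line in direct_mapping(nodes, edges):
        print(line)
```

Even this toy version runs into the kind of question a well-defined mapping has to settle: a quoted triple identifies an edge only by (source, label, target), so two parallel `knows` edges between the same nodes with different properties would collapse into one.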

Specifying a Standard

#W3CGraphWorkshop Specifying a Standard session:

– Libkin: a standard should be written in 1) natural language, 2) denotational semantics, and 3) a reference implementation

– Who does the semantic work? Academics! But they don’t get credit. This needs to be addressed

(1/3)

— Juan Sequeda (@juansequeda) March 5, 2019

– Positive experience between U. Edinburgh and Neo4j defining the formal semantics of Cypher

– Good idea to have industry work with academia … and invest in academia #W3CGraphWorkshop (3/3)

— Juan Sequeda (@juansequeda) March 5, 2019

I find it very cool that Filip Murlak and colleagues defined a formal, readable, and executable semantics of Cypher in Prolog, which is based on the formal semantics defined by the folks from U. of Edinburgh. This reminds me of when I took a course with JC Browne on Verification and Validation of Software Systems and learned about Tony Hoare’s and Jay Misra’s Verification Grand Challenge.

Finally, Andy Seaborne made a very important point:

Tests suites and formal semantics are not either-or. They reach different audiences. If you want wide spread implementation, then test suites work well and provide a way to get implement reports/evaluations. #W3CGraphWorkshop

— Andy Seaborne (@AndySeaborne) March 6, 2019

Graph Schema

Happy to share what the Property Graph Schema Working Group has been working on for a few months.

Slides https://t.co/7TzSMPobnq

Industry Survey https://t.co/MdfK1fL2wi

Use Case & Requirements https://t.co/4ZuCy6zT6Z

Academic Survey https://t.co/J7rZYioIHG #W3CGraphWorkshop

— Juan Sequeda (@juansequeda) March 4, 2019

#W3CGraphWorkshop Graph schema session: a lot of fantastic discussion. We made a long list of desired features. The topic that continued to show up was… KEEP IT SIMPLE S….

— Juan Sequeda (@juansequeda) March 5, 2019

After the #W3CGraphWorkshop, the Property Graph Schema Working Group stayed for a full-day f2f meeting. Great progress! And glad to see that https://t.co/XXFqdJy8sp is useful! pic.twitter.com/w4IOG5rFn3

— Juan Sequeda (@juansequeda) March 8, 2019

Up to now, the Property Graph Schema Working Group (PGSWG) has been informal. There was a consensus that it should gain some formality by becoming a task force within the Linked Data Benchmark Council (LDBC). More info soon!

What are the next steps?

#W3CGraphWorkshop My proposal for next steps in incubation/standardization efforts:

– RDF*/SPARQL* should be a @w3c member submission

– Abstract Property Graph Model/Schema

– Direct & customizable Mappings between RDF and Property Graph

– Extend JSON-LD to support Property Graph

— Juan Sequeda (@juansequeda) March 6, 2019

Lack of Diversity

Photos from the #W3CGraphWorkshop… observation: how come more women didn't attend? I am sure it wasn't intentional. So the general question is…where are the women developers who are interested in and working on these kinds of @w3c initiatives? https://t.co/dGDzAICBLT

— Margaret Warren (@ImageSnippets) March 6, 2019

– Marlène Hildebrand: This was the first time I met Marlène. She is at EPFL working on data integration using RDF, so we talked at length about converting different sources to RDF, mappings, and methodologies for creating ontologies and mappings.

Final quick notes

Great to meet up again with @cygri, one of the other co-editors of the W3C Relational Database to RDF Mapping standard, especially when one of the constant topics during the #W3CGraphWorkshop was mappings, mappings and mappings. pic.twitter.com/k9eGnoRl81

— Juan Sequeda (@juansequeda) March 8, 2019

– Adrian Gschwend posted his summary in a Twitter thread:

My interpretation of the W3C Graph Workshop wrap-up session today in Berlin. Sorry for the ones I left out, I focus on the ones I participated/understood, mostly around RDF. Feel free to contribute! Sessions/minutes are linked here: https://t.co/P3oV4Xshwr #W3CGraphWorkshop 👇

— Adrian Gschwend (@linkedktk) March 6, 2019

– Gautier Poupeau posted his summary in a Twitter thread in French:

In Berlin, @w3C is organizing a conference on the future of graphs and their interoperability across RDF, Property Graph, GraphQL and other graphs in SQL; the hashtag to follow is #W3CGraphWorkshop ⬇⬇ #thread ⬇⬇

— Gautier Poupeau (@lespetitescases) March 4, 2019

– Find a lot more tweets by searching for the #W3CGraphWorkshop hashtag.